Toward A Generic Federated Learning Platform Optimized For Computer Vision Applications

Abstract

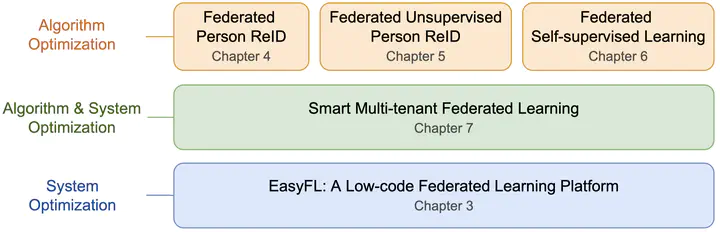

As a result of advances in deep learning, computer vision has transformed many industries with a wide range of applications. The majority of these applications rely heavily on centralizing large numbers of images to train deep neural networks. However, this centralized training approach is becoming infeasible due to increasingly stringent data privacy regulations, especially for privacy-sensitive computer vision applications such as face recognition and person re-identification, whose training images contain sensitive personal information. Federated learning (FL) is an emerging distributed training paradigm that preserves data privacy via in-situ model training on decentralized data. Existing FL implementations for computer vision, however, are optimized neither for system performance nor for specific computer vision applications. In this thesis, we aim to optimize the FL platform for computer vision applications via system and algorithm optimizations. First, we perform system optimization of FL systems and develop the first low-code FL platform, EasyFL, to improve researchers' productivity and efficiency in implementing new federated computer vision applications. We achieve this goal while ensuring great flexibility for customization by unifying simple API design, modular design, and granular training flow abstraction. Our evaluation demonstrates that EasyFL allows users to write less code and that our distributed training optimization accelerates training by 1.5 times. Building on EasyFL, we optimize algorithms for the following three computer vision applications: person re-identification (ReID), unsupervised person ReID, and self-supervised learning. We propose federated person ReID (FedReID), a new paradigm of person ReID training, and investigate its performance under statistical heterogeneity via benchmark analysis.
Based on the insights from this analysis, we propose three optimization methods to improve performance: client clustering, knowledge distillation, and weight adjustment. Extensive experiments demonstrate that these approaches achieve satisfying convergence with much better performance on all datasets. We further introduce the first federated unsupervised person ReID system (FedUReID), which learns person ReID models from decentralized edges without any labels. We improve its performance via edge-cloud joint optimization. As a result, our methods not only achieve higher accuracy but also reduce computation costs by 29%. Next, we advance self-supervised visual representation learning to learn from decentralized data by proposing the federated self-supervised learning (FedSSL) framework. Based on the framework, we conduct empirical studies to uncover in-depth insights into the essential building blocks of FedSSL. Inspired by these insights, we introduce a new approach to tackle the divergence issue caused by statistical heterogeneity. Extensive empirical studies demonstrate that our proposed approach is superior to existing methods in a wide range of settings. Moving beyond the optimization of a single application, we observe that an FL system in real-world scenarios, such as autonomous vehicles, may run multiple simultaneous training activities for multiple applications. We design a smart multi-tenant FL system that effectively coordinates and executes multiple simultaneous FL training activities. We first formalize the problem of multi-tenant FL, define multi-tenant FL scenarios, and introduce a vanilla multi-tenant FL system that trains activities sequentially to form baselines. Then, we propose two approaches to optimize multi-tenant FL by considering both synergies and differences among these training activities. Extensive experiments demonstrate that our method outperforms other methods while consuming 40% less energy.
We believe that our optimization approaches and insights will inspire practitioners to further explore the implementation and optimization of federated computer vision applications.